Multi-Class Metrics Made Simple, Part III: the Kappa Score (aka Cohen's Kappa Coefficient) | by Boaz Shmueli | Towards Data Science

Understanding the calculation of the kappa statistic: A measure of inter-observer reliability | Semantic Scholar

Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification - ScienceDirect

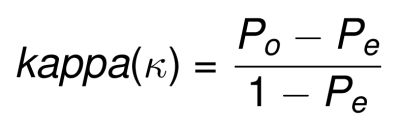

The kappa coefficient of agreement. This equation measures the fraction...
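For reference, the standard definition of (unweighted) Cohen's kappa for two raters is given below. It is written in terms of a $k \times k$ agreement (confusion) matrix with cell counts $n_{ij}$, row totals $n_{i+}$, column totals $n_{+i}$ and $N$ rated items. This notation is our own and is not taken from the figure. Here $p_o$ is the observed proportion of agreement and $p_e$ is the agreement expected by chance from the raters' marginal frequencies:

$$
\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad
p_o = \frac{1}{N}\sum_{i=1}^{k} n_{ii}, \qquad
p_e = \frac{1}{N^2}\sum_{i=1}^{k} n_{i+}\, n_{+i}
$$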

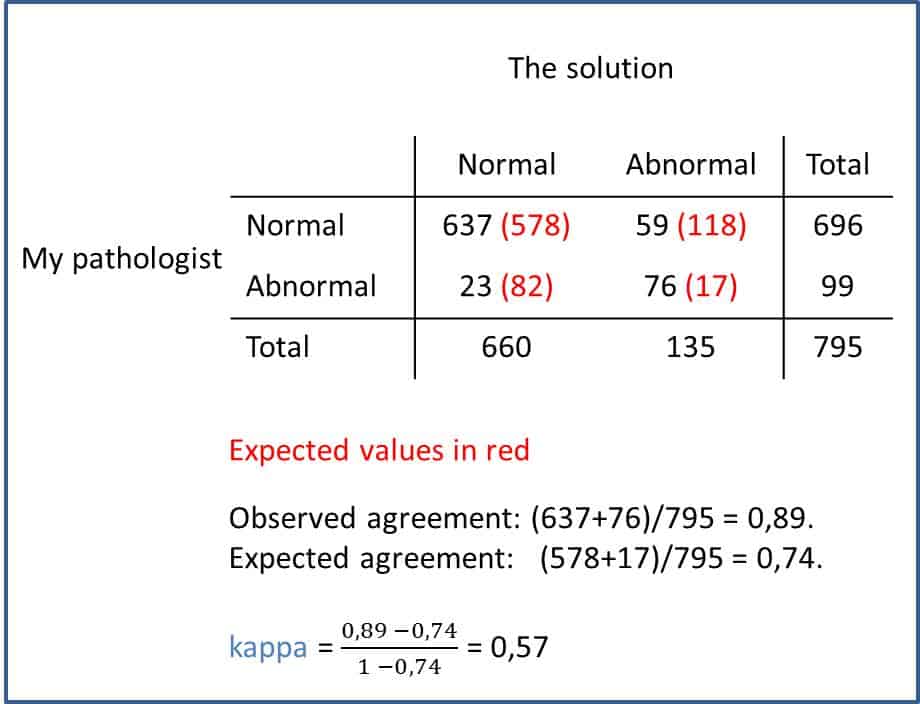

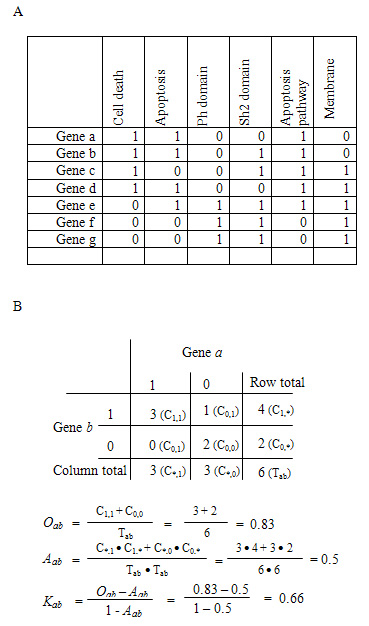

Understanding the calculation of the kappa statistic: A measure of inter-observer reliability Mishra SS, Nitika - Int J Acad Med

GitHub - jiangqn/kappa-coefficient: A Python script to compute the kappa coefficient, a statistical measure of inter-rater agreement.
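The repository's own code is not reproduced here. The following is an independent, minimal sketch of unweighted Cohen's kappa for two raters who label the same items. The function name `cohens_kappa` is hypothetical. The function compares observed agreement with the chance agreement implied by each rater's label frequencies:

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters (hypothetical helper, illustrative sketch)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    # Observed agreement p_o: fraction of items both raters labelled identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: product of each rater's marginal counts per label, over n^2.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        # Both raters used one and the same label for every item: kappa is undefined.
        raise ZeroDivisionError("kappa is undefined when expected agreement is 1")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    a = ["yes", "yes", "no", "no", "yes"]
    b = ["yes", "no", "no", "no", "yes"]
    print(cohens_kappa(a, b))
```

In the usage example the raters agree on 4 of 5 items ($p_o = 0.8$), and their marginals give $p_e = (3 \cdot 2 + 2 \cdot 3)/25 = 0.48$, so $\kappa = 0.32 / 0.52 \approx 0.615$. For a cross-check, scikit-learn's `sklearn.metrics.cohen_kappa_score` should return the same value for unweighted kappa.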

![More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/1-Figure1-1.png)

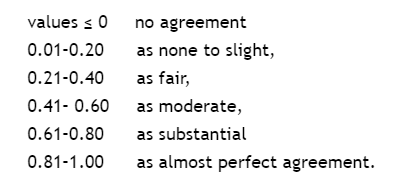

![Suggested ranges for the Kappa Coefficient [2].](https://www.researchgate.net/publication/325603545/figure/tbl2/AS:669212804653076@1536564174670/Suggested-ranges-for-the-Kappa-Coefficient-2.png)